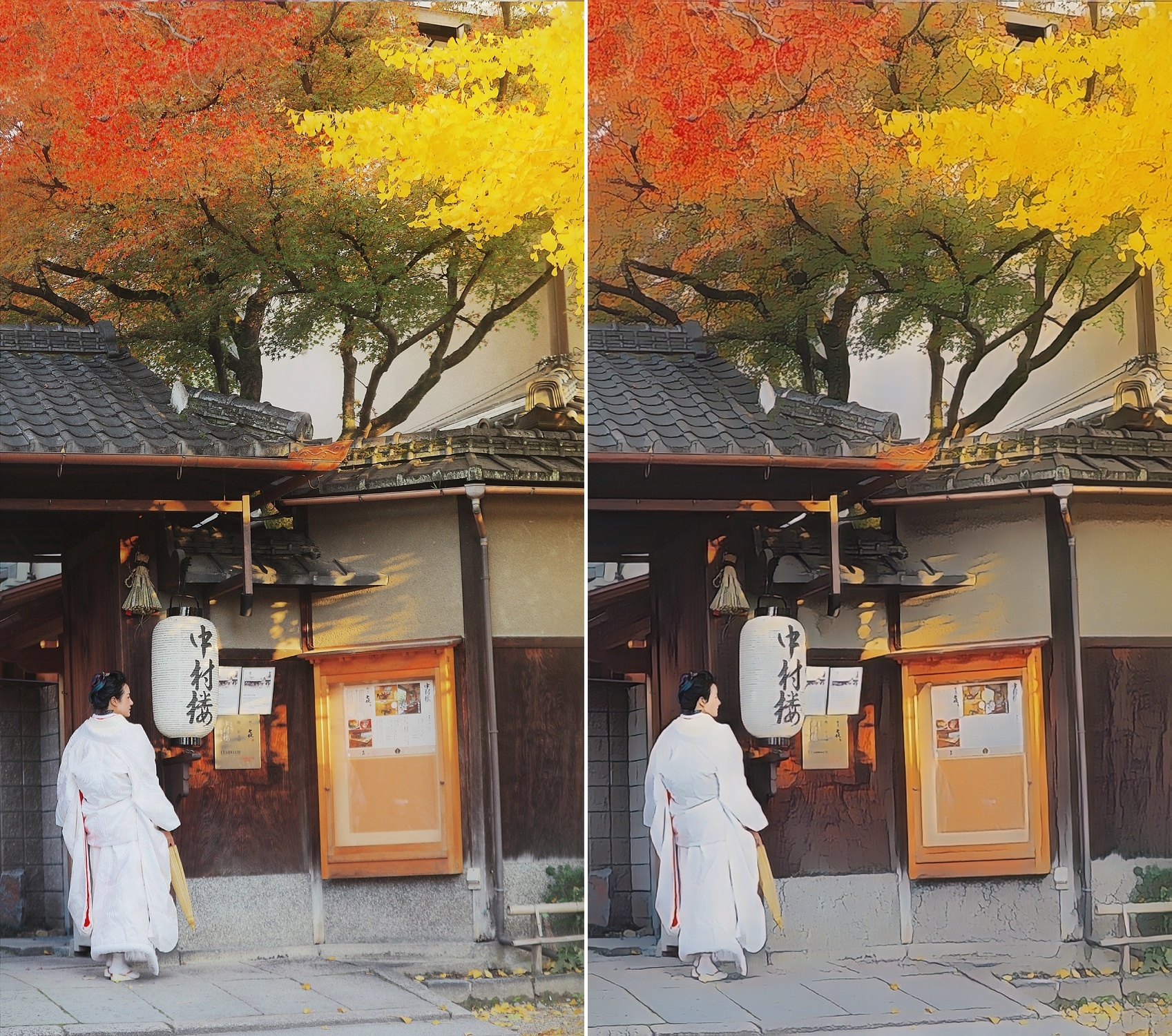

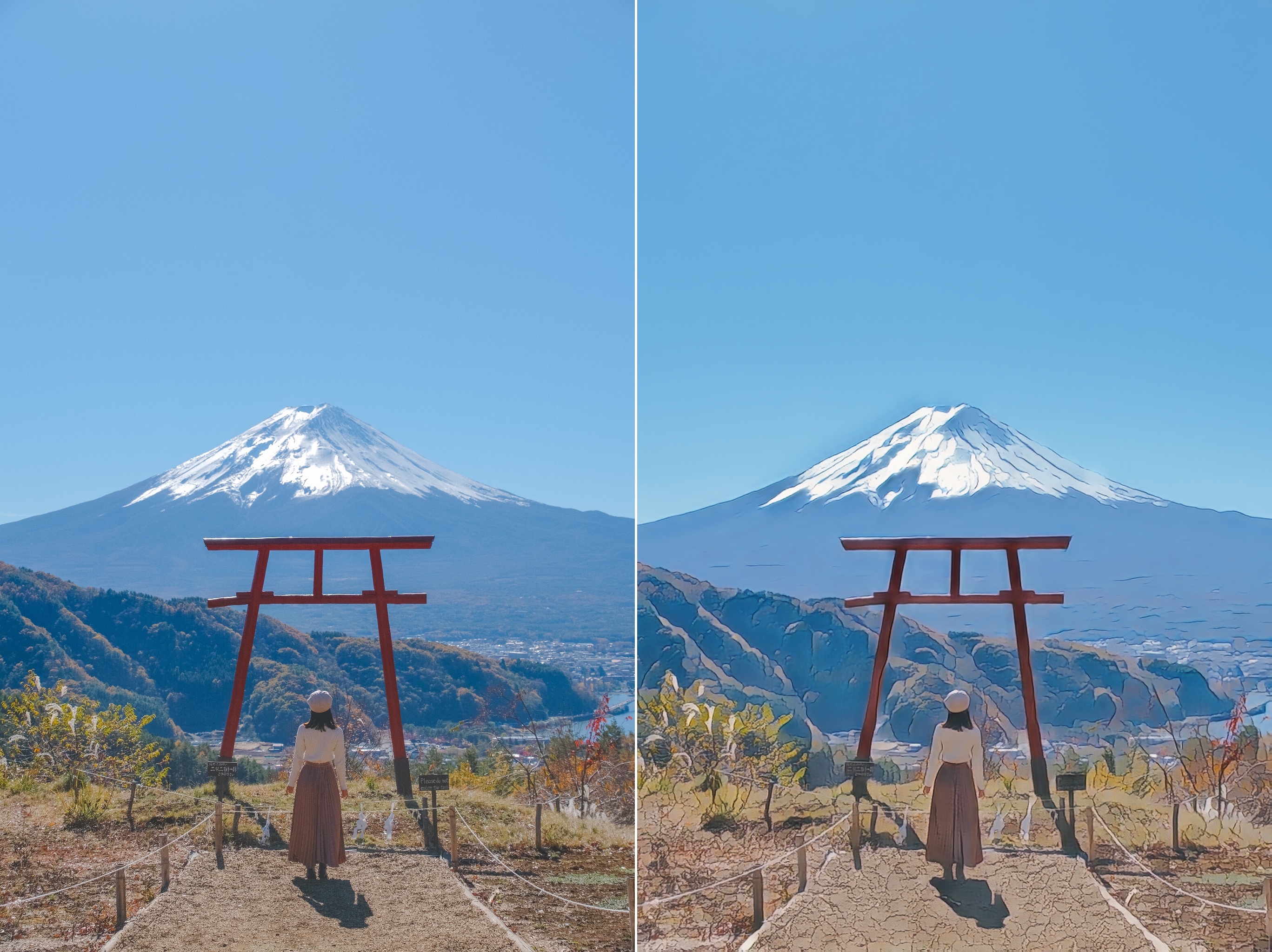

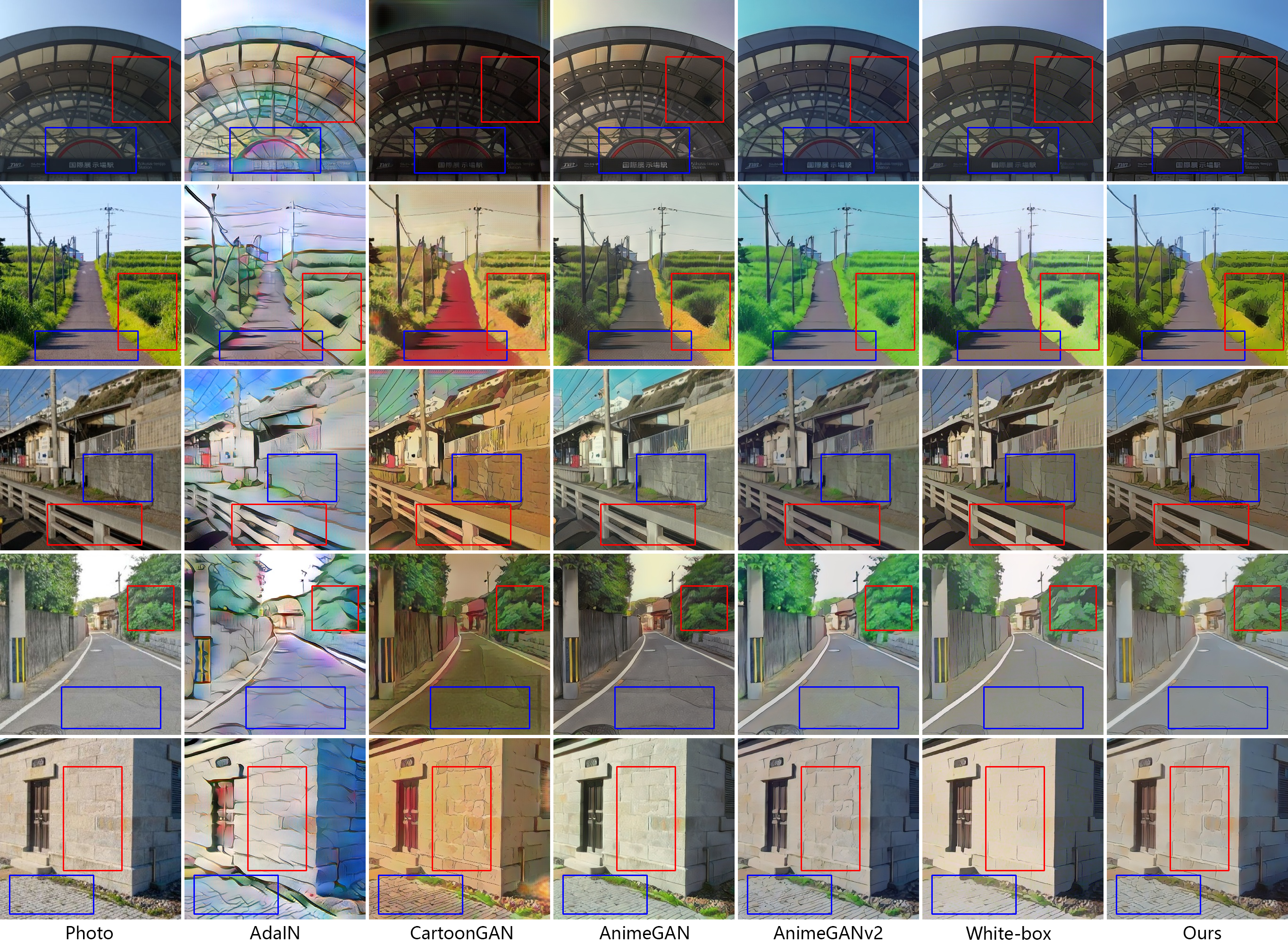

Photo animation transforms photos of real-world scenes into anime-style images, and it is a challenging task in AIGC (AI-generated content). Although previous methods have achieved promising results, they often introduce noticeable artifacts or distortions.

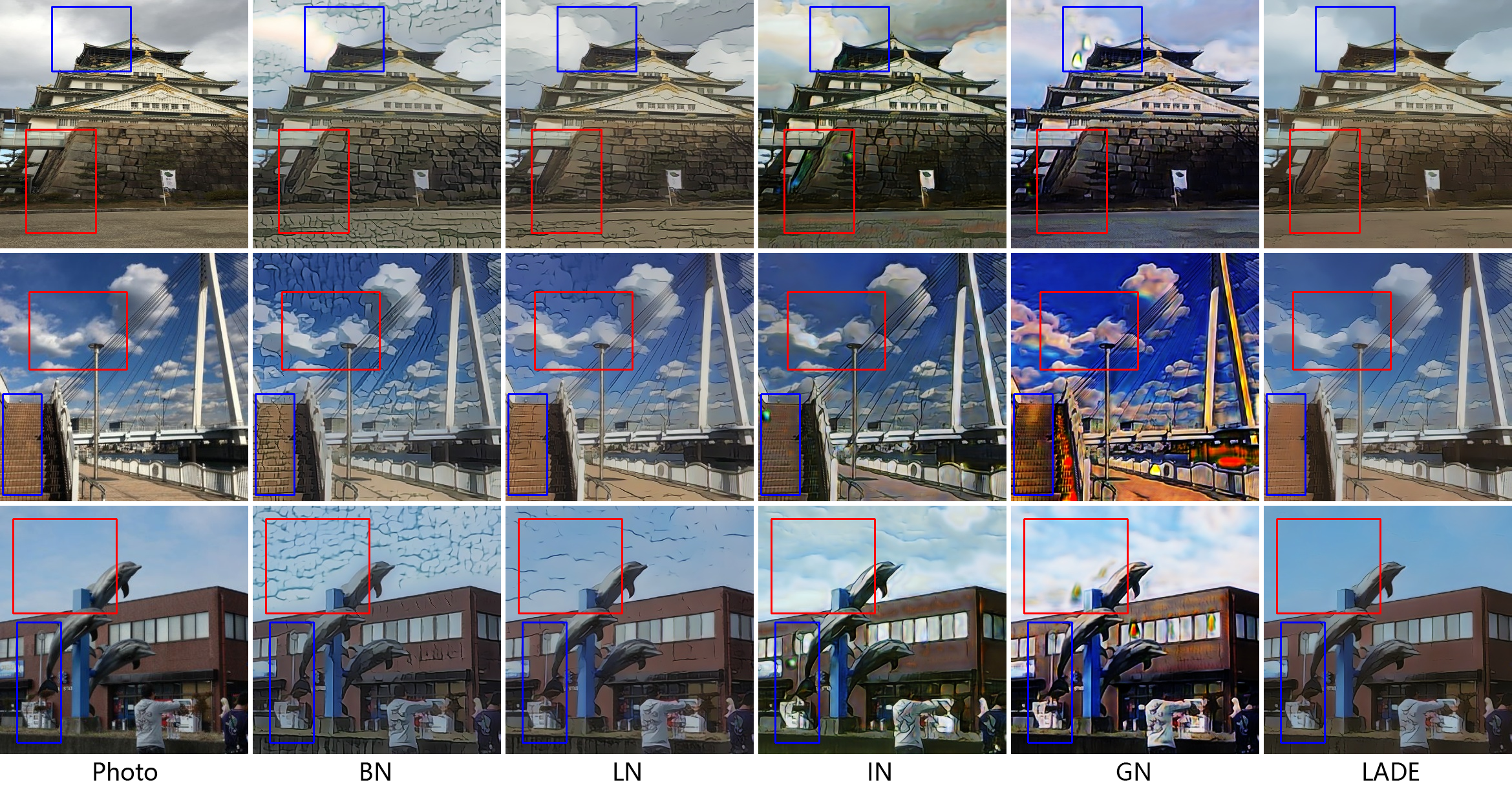

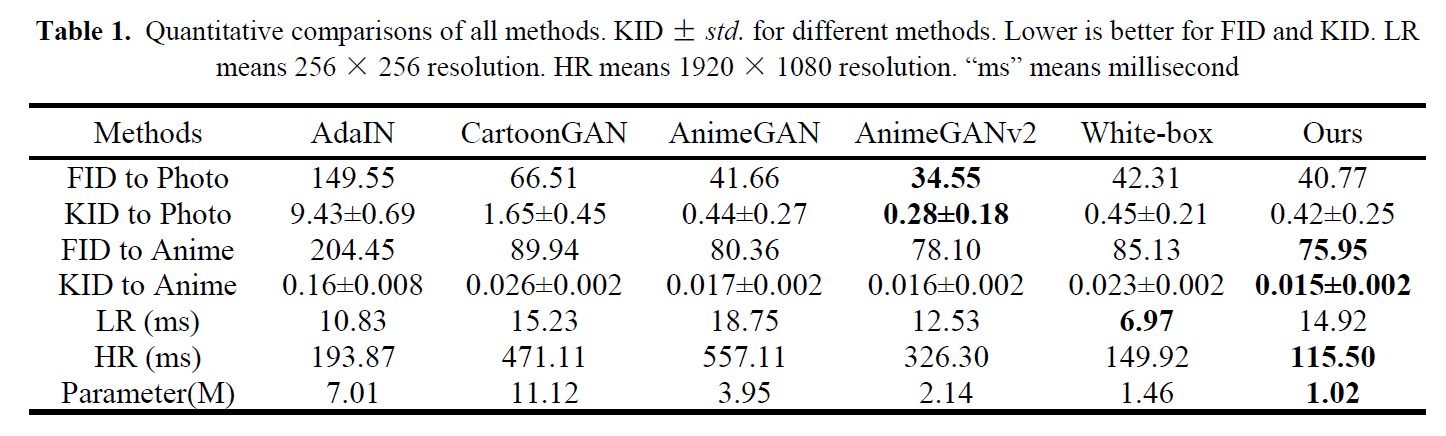

In this paper, we propose a novel double-tail generative adversarial network (DTGAN) for fast photo animation. DTGAN is the third version of the AnimeGAN series and is therefore also called AnimeGANv3. The generator of DTGAN has two output tails: a support tail that outputs coarse-grained anime-style images and a main tail that refines them. In DTGAN, we propose a novel learnable normalization technique, termed linearly adaptive denormalization (LADE), to prevent artifacts in the generated images. To improve the visual quality of the generated anime-style images, we propose two novel loss functions suited to photo animation: 1) the region smoothing loss, which weakens the texture details of the generated images to achieve anime effects with abstract details; and 2) the fine-grained revision loss, which eliminates artifacts and noise in the generated anime-style images while preserving clear edges. Furthermore, the generator of DTGAN is lightweight, with only 1.02 million parameters in the inference phase. DTGAN can be easily trained end-to-end with unpaired training data.
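The abstract names LADE but does not give its formula, so the following is only a rough sketch of what a "learnable denormalization" layer could look like, not the authors' definition. It assumes that the input is first instance-normalized and then rescaled and shifted using statistics of a learnable linear transform of the same input. The function names `instance_stats` and `lade_channel`, the scalar `weight` and `bias` (standing in for a learned pointwise convolution), and the single-channel flattened input are all hypothetical simplifications.

```python
import statistics


def instance_stats(values, eps=1e-5):
    """Return the mean and (eps-stabilized) standard deviation of one channel."""
    mean = statistics.fmean(values)
    var = statistics.pvariance(values, mu=mean)
    return mean, (var + eps) ** 0.5


def lade_channel(x, weight, bias, eps=1e-5):
    """Hypothetical LADE-style layer for a single flattened channel.

    Assumption: scale (gamma) and shift (beta) are the statistics of a
    learnable linear transform of the input, not fixed parameters.
    """
    # Step 1: instance-normalize the input channel.
    mu_x, sigma_x = instance_stats(x, eps)
    normalized = [(v - mu_x) / sigma_x for v in x]

    # Step 2: learnable linear map of the same input
    # (a scalar stand-in for a pointwise convolution).
    z = [weight * v + bias for v in x]

    # Step 3: denormalize with the statistics of the transformed input.
    beta, gamma = instance_stats(z, eps)
    return [gamma * n + beta for n in normalized]


if __name__ == "__main__":
    channel = [0.0, 1.0, 2.0, 3.0]
    print(lade_channel(channel, weight=2.0, bias=1.0))
```

Under these assumptions, `weight=1.0` and `bias=0.0` make the layer an identity mapping, while other values let training choose the output statistics of each channel instead of forcing them to zero mean and unit variance.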

Extensive experiments demonstrate, both qualitatively and quantitatively, that our method produces high-quality anime-style images from real-world photos and outperforms state-of-the-art models.